PredictableContextPrior

Surface Reconstruction from Point Clouds by Learning Predictive Context Priors

CVPR 2022

Abstract

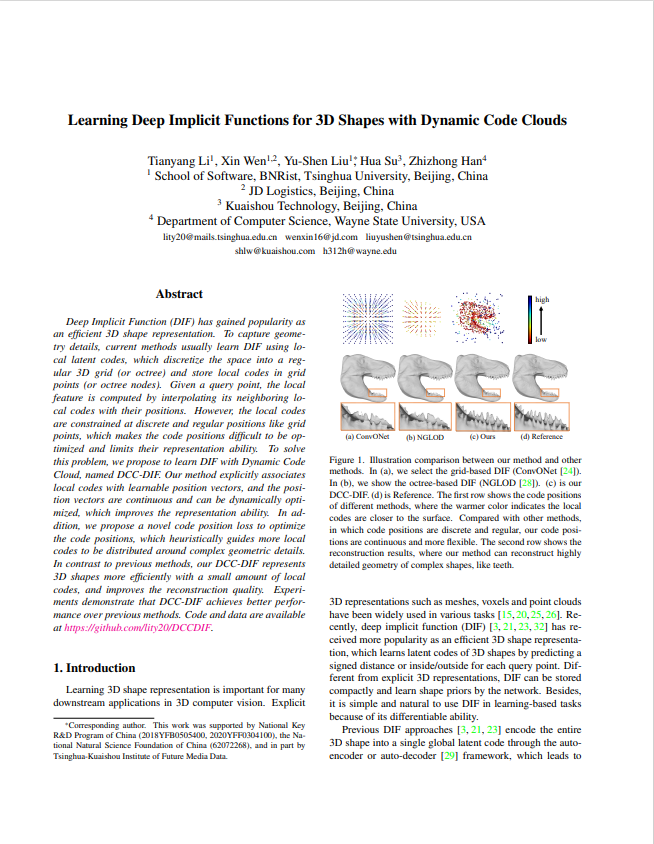

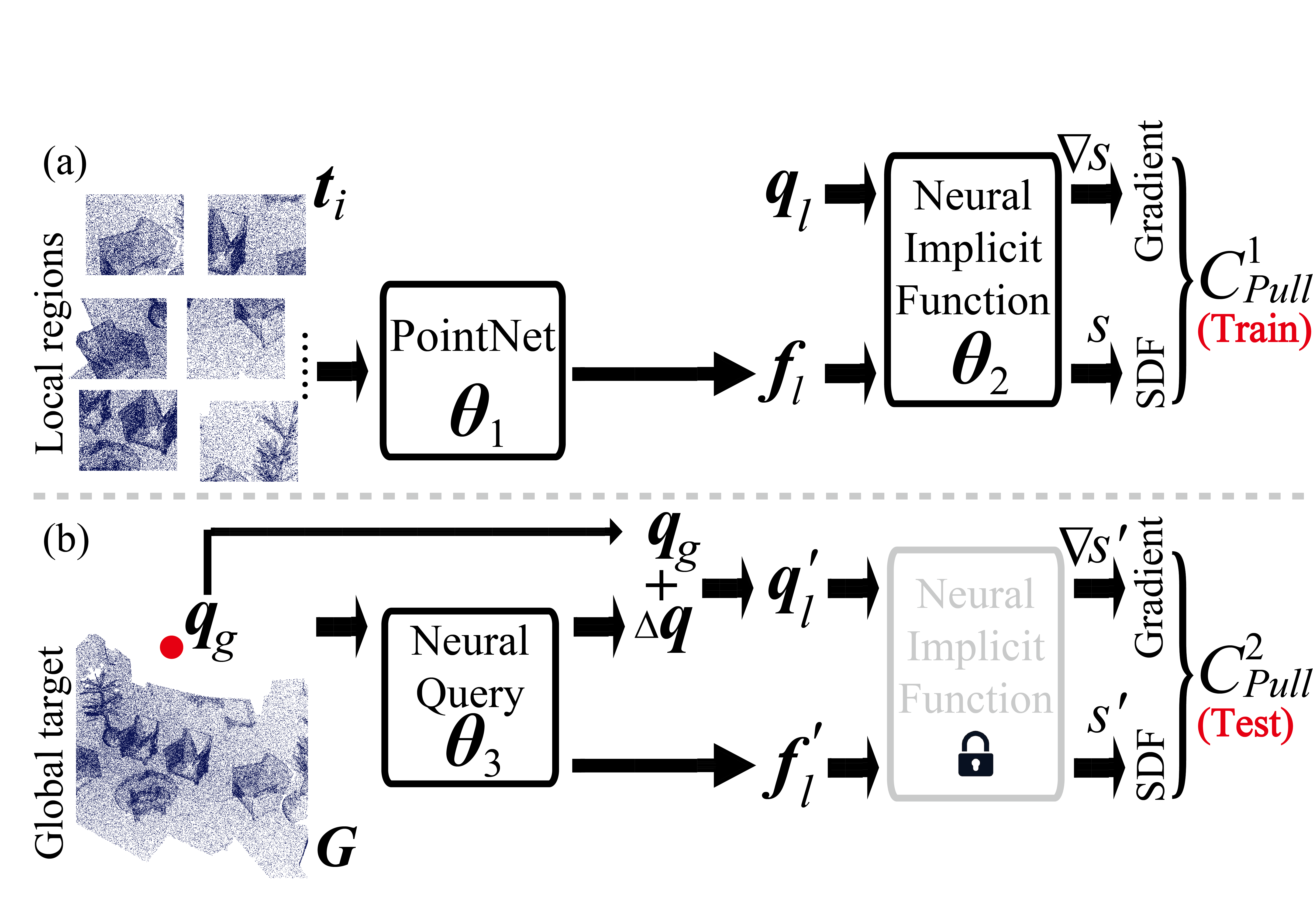

Surface reconstruction from point clouds is vital for 3D computer vision. State-of-the-art methods leverage large datasets to first learn local context priors that are represented as neural network-based signed distance functions (SDFs) with some parameters encoding the local contexts. To reconstruct a surface at a specific query location at inference time, these methods then match the local reconstruction target by searching for the best match in the local prior space (by optimizing the parameters encoding the local context) at the given query location. However, this requires the local context prior to generalize to a wide variety of unseen target regions, which is hard to achieve. To resolve this issue, we introduce Predictive Context Priors by learning Predictive Queries for each specific point cloud at inference time. Specifically, we first train a local context prior using a large point cloud dataset similar to previous techniques. For surface reconstruction at inference time, however, we specialize the local context prior into our Predictive Context Prior by learning Predictive Queries, which predict adjusted spatial query locations as displacements of the original locations. This leads to a global SDF that fits the specific point cloud the best. Intuitively, the query prediction enables us to flexibly search the learned local context prior over the entire prior space, rather than being restricted to the fixed query locations, and this improves the generalizability. Our method does not require ground truth signed distances, normals, or any additional procedure of signed distance fusion across overlapping regions. Our experimental results in surface reconstruction for single shapes or complex scenes show significant improvements over the state-of-the-art under widely used benchmarks.
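The core idea of the abstract, keeping a pretrained prior fixed and learning only how to displace the query locations fed into it, can be illustrated with a deliberately tiny sketch. Everything here is an assumption made for illustration and not the paper's implementation: the "prior" is an analytic unit-sphere SDF rather than a trained network, the "Predictive Query" is a single shared offset vector `b` rather than a per-query network, and the loss simply asks the displaced input points to lie on the prior's zero level set. The names `prior_sdf`, `fit_offset`, and `specialized_sdf` are hypothetical.

```python
import numpy as np


def prior_sdf(q):
    """Frozen stand-in for a pretrained local context prior.

    Assumption: an analytic SDF of a unit sphere centred at the origin.
    """
    return np.linalg.norm(q, axis=1) - 1.0


def prior_sdf_grad(q):
    """Analytic gradient of prior_sdf with respect to the query location."""
    return q / np.linalg.norm(q, axis=1, keepdims=True)


def fit_offset(points, steps=500, lr=0.1):
    """Learn a displacement b so that prior_sdf(points + b) is close to zero.

    Only b is updated; the prior itself is never modified.
    Assumption: a toy on-surface squared loss, minimised by plain gradient descent.
    """
    b = np.zeros(points.shape[1])
    for _ in range(steps):
        shifted = points + b
        residual = prior_sdf(shifted)
        # Gradient of mean(residual**2) with respect to b (chain rule).
        grad = 2.0 * np.mean(residual[:, None] * prior_sdf_grad(shifted), axis=0)
        b -= lr * grad
    return b


def specialized_sdf(q, b):
    """Global SDF for this point cloud: the frozen prior queried at displaced locations."""
    return prior_sdf(q + b)


# Toy point cloud: a unit sphere whose centre the prior has never seen.
rng = np.random.default_rng(0)
directions = rng.normal(size=(2000, 3))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)
center = np.array([0.3, -0.2, 0.1])
points = center + directions

b = fit_offset(points)
print("learned displacement:", b)
print("max |SDF| on input points:", np.abs(specialized_sdf(points, b)).max())
```

In this toy setting the learned displacement should approach the negative of the sphere's centre, so the unchanged prior ends up describing the shifted shape. A real system would replace the shared offset with a network that predicts a displacement per query and would use a training signal that needs no ground truth signed distances or normals, as the abstract states.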

Results:

Visual comparison with Points2Surf [1] and Neural-Pull [2] on the ABC dataset.

[Figure columns, left to right: Points2Surf, Neural-Pull, Ours]

We reconstruct highly accurate surfaces from 300K points on the FAMOUS dataset.

[Figure columns, left to right: Liberty, Dragon]

Surface reconstruction of a real scanned scene with texture.

[Figure: input raw point cloud scanned by LiDAR sensors]

Citation

@inproceedings{PredictableContextPrior,
  title={Surface Reconstruction from Point Clouds by Learning Predictive Context Priors},
  author={Baorui Ma and Yu-Shen Liu and Matthias Zwicker and Zhizhong Han},
  booktitle={IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2022}
}

Related Work

[1] Philipp Erler, Paul Guerrero, Stefan Ohrhallinger, Niloy J. Mitra, and Michael Wimmer. Points2Surf: Learning Implicit Surfaces from Point Clouds. European Conference on Computer Vision (ECCV), 2020.

[2] Baorui Ma, Zhizhong Han, Yu-Shen Liu, and Matthias Zwicker. Neural-Pull: Learning Signed Distance Functions from Point Clouds by Learning to Pull Space onto Surfaces. International Conference on Machine Learning (ICML), 2021, PMLR 139: 7246-7257.

[3] Baorui Ma, Yu-Shen Liu, and Zhizhong Han. Reconstructing Surfaces for Sparse Point Clouds with On-Surface Priors. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.