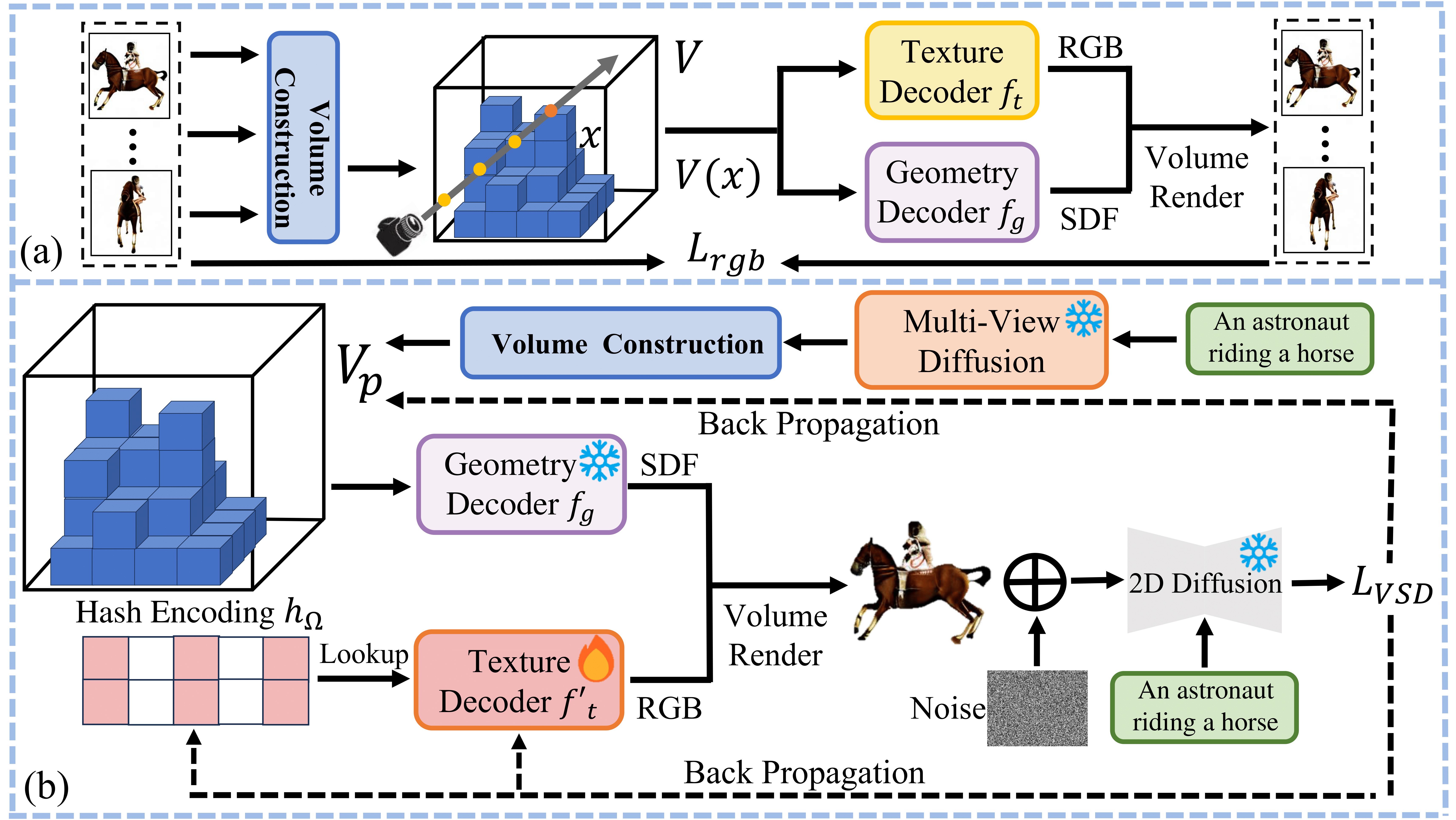

Method Overview

We focus on generating 3D content with consistently accurate geometry and fine visual detail. To this end, we equip 2D diffusion priors with the ability to produce 3D-consistent geometry while retaining their generalizability. GeoDream consists of two stages. i) Following MVS-based methods, we take the multi-view images predicted by a multi-view diffusion model and construct a cost volume that serves as native 3D geometric priors; this step completes within 2 minutes. ii) During prior refinement, we show that the geometric priors can be further fine-tuned with a 2D diffusion model to improve both rendering quality and geometric accuracy. We argue that disentangling 3D and 2D priors is a promising direction for preserving both the generalization of 2D diffusion priors and the consistency of 3D priors.
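To make the cost-volume idea in stage i) concrete, the following is a minimal sketch of variance-based aggregation, a common choice in MVS-style pipelines. It is an illustration under stated assumptions, not GeoDream's implementation: the text above only says that a cost volume is built following MVS-based methods, so the variance metric, the flattened voxel layout, and the name `variance_cost_volume` are all hypothetical. The sketch also assumes each view's features have already been sampled onto a shared voxel grid.

```python
from statistics import pvariance
from typing import List, Sequence


def variance_cost_volume(view_features: Sequence[Sequence[float]]) -> List[float]:
    """Aggregate per-view features into one matching cost per voxel.

    view_features[v][i] is the (scalar) feature that view v contributes at
    flattened voxel index i. Assumption: all views were already sampled on
    the same voxel grid, so index i means the same 3D location in each view.
    """
    if len(view_features) < 2:
        raise ValueError("need at least two views to measure agreement")
    n_voxels = len(view_features[0])
    if any(len(features) != n_voxels for features in view_features):
        raise ValueError("all views must cover the same voxel grid")
    # Low variance across views means the views agree at that voxel,
    # which is evidence that a surface passes through it.
    return [pvariance(samples) for samples in zip(*view_features)]


# Three hypothetical views over a three-voxel grid.
views = [
    [0.2, 0.9, 0.5],
    [0.2, 0.1, 0.5],
    [0.2, 0.5, 0.5],
]
cost = variance_cost_volume(views)
# Voxels 0 and 2 receive zero cost (all views agree); voxel 1 does not.
```

In a real pipeline the features would be multi-channel tensors and the aggregation would run on a GPU; the pure-Python version here only shows the per-voxel agreement measure.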